Detecting Information Pollution, Fake News & Harmful Content

In a set of famous experiments, researchers Elihu Katz, Paul F. Lazarsfeld, and Elmo Roper measured, in the field of marketing communication, how a simple story changed as it passed from person to person.

At every step, participants added details, often completely fabricated ones, and after a few retellings the original story was lost and replaced by an entirely new one. Interestingly, this new story was richer in detail and more likely to attract people’s attention: the story had become more “viral”.

When a piece of content goes viral, not only is it shared more easily and widely, but its emotional characteristics also tend to leave a more durable impression. Indeed, viral content is often emotionally charged, sparking controversy or spreading fake news.

The origin of many conspiracy theories can be traced back to such compelling stories that reach the status of “memes”: the “genes” of information, which compete, evolve, and interbreed until they become part of an entire (popular) culture.

Information Pollution & Social Media: Memes with Consequences

This phenomenon of information pollution can have serious consequences when amplified by social network platforms. The network effect can make the diffusion of both legitimate and fake news grow exponentially.
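The exponential growth can be illustrated with a minimal branching-process sketch. It assumes, purely for illustration, that each sharer exposes a fixed number of contacts (`fanout`) and that each contact re-shares independently with probability `share_prob`; the network is treated as a tree, so nobody is reached twice. Under these assumptions the expected number of new sharers at step t is R^t, with R = fanout * share_prob: the spread grows exponentially when R > 1 and tends to die out when R < 1. The function names and parameters are hypothetical, not taken from any specific study.

```python
import random


def expected_new_sharers(fanout: int, share_prob: float, steps: int) -> list[float]:
    """Expected number of new sharers at each step of a simple branching process.

    Each sharer exposes `fanout` contacts and each contact re-shares with
    probability `share_prob`, so every step multiplies the expected number
    of sharers by R = fanout * share_prob.
    """
    r = fanout * share_prob
    return [r ** t for t in range(steps + 1)]


def simulate_cascade(fanout: int, share_prob: float, steps: int, seed: int = 0) -> list[int]:
    """One random run of the same process, starting from a single sharer."""
    rng = random.Random(seed)
    history = [1]
    for _ in range(steps):
        exposed = history[-1] * fanout
        # Each exposed contact independently decides whether to re-share.
        new_sharers = sum(rng.random() < share_prob for _ in range(exposed))
        history.append(new_sharers)
        if new_sharers == 0:  # the cascade has died out
            break
    return history


print(expected_new_sharers(4, 0.5, 5))  # [1.0, 2.0, 4.0, 8.0, 16.0, 32.0]
print(simulate_cascade(4, 0.5, 5, seed=42))
```

Real social graphs have overlapping neighbourhoods, so growth eventually saturates; the tree assumption only captures the early, exponential phase.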

Scientists have documented the formation of “echo chambers”, i.e. communities of people who tend to believe in shared narratives, often related to conspiracy theories and concentrated in just a few semantic domains: environment, diet, health, and geopolitics.

These closed communities, in which users with similar tastes and ideas spread misinformation, have been seen both as an opportunity by canny politicians and as a threat to democracy and finance.

In finance specifically, the risk of misinformation has raised concerns about market stability, as the extreme sensitivity of stock markets to rumours demonstrates.

Much of the volatility in stock prices, with its sudden jumps and large movements, can be explained by the effect of news: its frequency and its emotional content (aka the sentiment polarity of the news).

How to Spot Dangerous Memes

But what are the determinants that make a message viral and potentially harmful?

SNP: Social Networking Potential

Scientists believe that every person in a social network has a “social networking potential”, or SNP (check for instance this source): a specific ability to influence their own neighbours.

Individuals with a high SNP are often targeted by viral advertising campaigns, as they can spread a message faster and more effectively than those with a lower score. In many cases these people are “influencers” who do this professionally and care a lot about their social circles.

In social network analysis, measures of SNP are related to the concept of centrality, i.e. a measure of a node’s importance and influence given its position in the network.

Trivially, the simplest measure of centrality is the number of links a node has (its degree): on social media platforms, for instance, this translates to the number of followers or the number of friends.
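As a minimal sketch, degree centrality can be computed from a plain list of links. The version below treats links as undirected (friendships rather than one-way follows) and normalises each node’s degree by n - 1, the maximum possible number of neighbours; this is the same normalisation used by `networkx.degree_centrality`. The graph and the function name are hypothetical.

```python
from collections import defaultdict


def degree_centrality(edges: list[tuple[str, str]]) -> dict[str, float]:
    """Normalised degree centrality: each node's number of distinct
    neighbours divided by the maximum possible, n - 1."""
    neighbours: dict[str, set[str]] = defaultdict(set)
    for a, b in edges:
        if a == b:  # ignore self-loops
            continue
        neighbours[a].add(b)
        neighbours[b].add(a)
    n = len(neighbours)
    if n < 2:
        return {node: 0.0 for node in neighbours}
    return {node: len(nbrs) / (n - 1) for node, nbrs in neighbours.items()}


# Hypothetical friendship graph (undirected).
friendships = [("ana", "ben"), ("ana", "cai"), ("ana", "dev"), ("ben", "cai"), ("dev", "eli")]
scores = degree_centrality(friendships)
print(max(scores, key=scores.get), scores)
```

Here "ana" is linked to three of the four other users and gets the highest score, 0.75. For a directed follower graph, counting incoming links (followers) would be the closer analogue.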

In this specific case, users with many connections tend to be trusted more than users with only a few. A high SNP generates trust and confidence, making it easier and more effective to pass a message on.

Emotional Content

Besides SNP, another factor that accelerates the diffusion of a message is its emotional content (the mood of a sentence). Charging messages with emotion is a common practice in marketing campaigns.

Advertisers use all sorts of emotional tricks (for instance, telling stories involving kids, family, freedom, etc.) to send messages that are non-rational and charged with unconscious references to specific positive (or, conversely, negative) emotions, with the goal of making the impression last longer.

Sentiment in a text can be measured with sentiment analysis tools, i.e. specialised software or software libraries that estimate the polarity of the content: its inclination to be positive, neutral, or negative.
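One of the simplest approaches is lexicon-based scoring: look up each word in a list of words with known sentiment weights and average the matches. The sketch below uses a tiny, hypothetical lexicon and a hypothetical `neutral_band` threshold; it ignores negation ("not safe" still counts as positive) and context, which real tools such as the VADER analyser in NLTK (`SentimentIntensityAnalyzer.polarity_scores`) or trained classifiers handle far better.

```python
import re

# Hypothetical toy lexicon: word -> sentiment weight in [-1, 1].
# Real tools rely on much larger, validated lexicons or trained models.
LEXICON = {
    "love": 1.0, "great": 1.0, "safe": 0.5,
    "shocking": -1.0, "scandal": -1.0, "lie": -1.0, "dangerous": -1.0,
}


def polarity(text: str, neutral_band: float = 0.05) -> tuple[float, str]:
    """Average the weights of known words and map the result to a label."""
    tokens = re.findall(r"[a-z']+", text.lower())
    weights = [LEXICON[t] for t in tokens if t in LEXICON]
    if not weights:
        return 0.0, "neutral"
    score = sum(weights) / len(weights)
    if score > neutral_band:
        return score, "positive"
    if score < -neutral_band:
        return score, "negative"
    return score, "neutral"


for msg in ["Shocking scandal: they lie to you!", "I love this great, safe city", "The meeting is at noon"]:
    print(msg, "->", polarity(msg))
```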

Polarisation & Misinformation

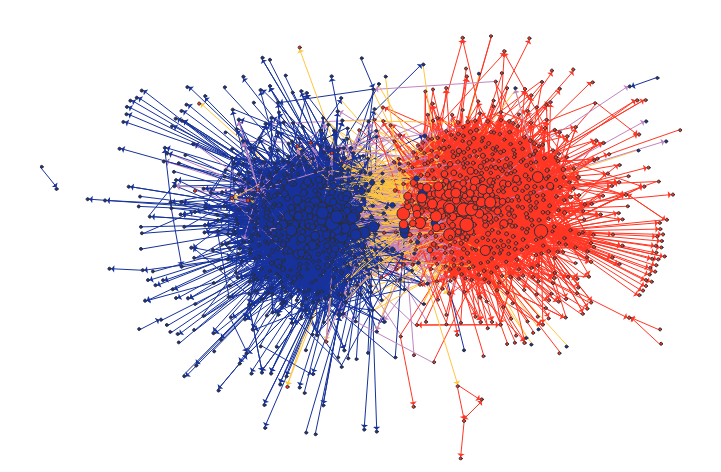

In social networks, messages that go viral often originate from polarised communities. Polarisation refers to communities whose network of discussion appears split between two or more sides; such communities are more likely to spread misinformation.

Measuring polarisation in online communities is a good predictor of the tendency to spread news and rumours. If a community is deeply divided and emotional content starts to prevail over more reasonable voices, messages move faster and percolate through the social network, and the community ends up generating potentially harmful content which, as we saw, may increase market volatility or even damage the political debate.
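There are many ways to quantify polarisation; one simple option, used here only as an illustration, is Krackhardt’s E-I index: (external links - internal links) / (all links), where "internal" links connect members of the same side. A value close to -1 means discussion almost never crosses sides. The sketch assumes each user has already been assigned to a side, for example by a community-detection algorithm; the network and labels are hypothetical.

```python
def ei_index(edges: list[tuple[str, str]], group: dict[str, str]) -> float:
    """Krackhardt's E-I index: (external - internal) / (external + internal).

    -1 means every link stays inside its own side (maximally polarised),
    +1 means every link crosses sides.
    """
    external = internal = 0
    for a, b in edges:
        if group[a] == group[b]:
            internal += 1
        else:
            external += 1
    total = external + internal
    if total == 0:
        raise ValueError("the graph has no edges")
    return (external - internal) / total


# Hypothetical discussion network with community labels assigned beforehand.
group = {"a": "left", "b": "left", "c": "left", "x": "right", "y": "right", "z": "right"}
links = [("a", "b"), ("b", "c"), ("a", "c"), ("x", "y"), ("y", "z"), ("x", "z"), ("c", "x")]
print(round(ei_index(links, group), 3))  # -0.714
```

Only one of the seven links crosses sides, so the index is (1 - 6) / 7, a strongly polarised network.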

The picture above, from an article by Lada Adamic (Divided They Blog), visualises two divided political communities in a network of political blogs. In modern times, political leaders have learned how to take advantage of this kind of unverified rumour, or “fake news”.

People who believe in conspiracy theories, and more often than not marketers and political leaders during campaigns, tend to use controversial content charged with sentiment; their messages spread like wildfire and polarise public opinion for a long time.

Detecting Information Pollution

As we’ve seen, in order to detect information pollution, fake news, and harmful rumours, you should consider:

- The social networking potential of individuals and the total SNP of the communities they belong to

- The emotional content of a message

- How polarised the community is
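As a purely hypothetical illustration of how these three signals might be combined, the sketch below computes a weighted risk score in [0, 1]. Every choice here is an assumption: the inputs (author and community SNP already normalised to [0, 1], a sentiment polarity in [-1, 1], and the community’s Krackhardt E-I index in [-1, 1], where -1 means no links cross sides), the mapping of each signal onto [0, 1], and the weights. A real system would learn such weights from labelled examples rather than fix them by hand.

```python
from dataclasses import dataclass


@dataclass
class MessageSignals:
    author_snp: float     # e.g. normalised degree centrality of the author, in [0, 1]
    community_snp: float  # e.g. mean centrality of the author's community, in [0, 1]
    sentiment: float      # polarity score of the message, in [-1, 1]
    ei_index: float       # E-I index of the community, in [-1, 1]


# Hypothetical weights; a real system would fit them on labelled examples.
WEIGHTS = {"author": 0.3, "community": 0.2, "emotion": 0.25, "polarisation": 0.25}


def risk_score(s: MessageSignals) -> float:
    emotion = abs(s.sentiment)               # strong feelings of either sign count
    polarisation = (1.0 - s.ei_index) / 2.0  # map E-I from [-1, 1] to [1, 0]
    score = (
        WEIGHTS["author"] * s.author_snp
        + WEIGHTS["community"] * s.community_snp
        + WEIGHTS["emotion"] * emotion
        + WEIGHTS["polarisation"] * polarisation
    )
    return min(max(score, 0.0), 1.0)  # clamp in case inputs fall outside their ranges


calm = MessageSignals(author_snp=0.1, community_snp=0.2, sentiment=0.05, ei_index=0.4)
heated = MessageSignals(author_snp=0.9, community_snp=0.7, sentiment=-0.95, ei_index=-0.8)
print(round(risk_score(calm), 3), round(risk_score(heated), 3))
```

A linear combination is chosen only for readability; it cannot capture interactions such as emotional content mattering more inside polarised communities.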

Given the scale of the problem - the sheer number of existing messages and the massive volume of news that is produced daily - it’s only feasible to detect fake news with automated classification tools.

Unfortunately, there’s no silver bullet. Currently, no single tool, not even human judgement, is able to detect 100% of fake news. There’s a small but significant chunk of information that resists classification: fake messages that machine learning algorithms wrongly label as “not fake news” (false negatives).
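The share of fake news that slips through is usually reported as the miss rate (false negative rate), FN / (TP + FN), the complement of recall. The sketch below computes it together with precision from true and predicted labels, where 1 means fake news; the labels are hypothetical toy values, not results from any real classifier.

```python
def detection_report(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Confusion counts and rates, with 1 = fake news and 0 = legitimate."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # fake news that slips through
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # legitimate news flagged as fake
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    positives = tp + fn
    return {
        "tp": tp, "fn": fn, "fp": fp, "tn": tn,
        "recall": tp / positives if positives else 0.0,
        "miss_rate": fn / positives if positives else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
    }


# Hypothetical labels for eight messages, not real evaluation data.
truth = [1, 1, 1, 1, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 0, 0, 1, 0]
print(detection_report(truth, predicted))
```

Lowering a classifier’s decision threshold usually reduces the miss rate at the cost of more false positives, which is why a non-zero residue of undetected fake news is hard to avoid.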

This subset of fake news can currently continue to spread and pollute the information space. Rumours cannot be stopped, but at least they can be measured and the damage contained. To accomplish this, social networks have to be monitored (aka social listening). While the new era of digital communication, with its amplification effect, has an unprecedented ability to spread fake news, it at least has the advantage of making content traceable, which makes an early response possible.
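A basic building block of social listening is an alert that fires when mentions of a topic suddenly rise above their recent baseline. The sketch below flags any hour whose count exceeds the mean of the previous `window` hours by more than `threshold` standard deviations (a rolling z-score). The counts, the window size, the threshold, and the floor on the standard deviation are all hypothetical choices for illustration.

```python
from statistics import mean, pstdev


def detect_spikes(counts: list[int], window: int = 6, threshold: float = 3.0) -> list[int]:
    """Return indices where the count exceeds the mean of the previous
    `window` values by more than `threshold` standard deviations."""
    alerts = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mu = mean(history)
        # Floor the deviation at 1 so a nearly flat baseline does not
        # turn every small fluctuation into an alert.
        sigma = max(pstdev(history), 1.0)
        if (counts[i] - mu) / sigma > threshold:
            alerts.append(i)
    return alerts


# Hypothetical hourly mentions of a rumour's keywords.
mentions = [4, 5, 3, 4, 6, 5, 4, 5, 40, 38]
print(detect_spikes(mentions))  # [8]
```

Only the first burst (index 8) is flagged: once the spike enters the rolling window it inflates the baseline, so the following high hour no longer looks anomalous. For early response that is usually acceptable, since the first alert is what matters.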
